Hand gesture recognition using HandPose-OSC and Wekinator

Human-Computer Interaction is moving towards gesture-based input, so it's worth exploring how to train a simple hand gesture recogniser using a webcam. In this quick tutorial, we'll teach a machine learning algorithm to tell the difference between a flat hand, a clenched fist and a "peace sign" - no code needed!

Getting started

Before we get started, we need to download two programs: HandPose OSC and Wekinator.

HandPose OSC

This program takes the webcam feed as input and passes the image stream through a neural network, which estimates the position and landmarks of a single hand. It outputs the results as OSC (Open Sound Control), a network protocol used for connecting different programs.
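To give a feel for what travels over the network, here is a minimal sketch of how an OSC 1.0 message with float arguments is laid out: a padded address string, a padded type tag string and then big-endian 32-bit floats. The class `OscFloatMessage` and its methods are hypothetical helpers written for this illustration; they are not part of HandPose OSC or Wekinator, and the three values in `main` are made up.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Illustrative helper (not part of HandPose OSC or Wekinator):
// encodes and decodes OSC 1.0 messages whose arguments are all 32-bit floats.
class OscFloatMessage {

    // OSC strings are ASCII, NUL-terminated and padded to a multiple of 4 bytes.
    static byte[] paddedString(String s) {
        byte[] raw = s.getBytes(StandardCharsets.US_ASCII);
        byte[] padded = new byte[(raw.length / 4 + 1) * 4]; // zero-filled
        System.arraycopy(raw, 0, padded, 0, raw.length);
        return padded;
    }

    // Layout: address string, type tag string (",fff..."), then big-endian floats.
    static byte[] encode(String address, float[] values) {
        StringBuilder typeTags = new StringBuilder(",");
        for (int i = 0; i < values.length; i++) {
            typeTags.append('f');
        }
        byte[] addressBytes = paddedString(address);
        byte[] tagBytes = paddedString(typeTags.toString());
        ByteBuffer buffer = ByteBuffer.allocate(
                addressBytes.length + tagBytes.length + 4 * values.length);
        buffer.put(addressBytes).put(tagBytes);
        for (float v : values) {
            buffer.putFloat(v); // ByteBuffer is big-endian by default
        }
        return buffer.array();
    }

    // Returns the index just past the padded string that starts at 'start'.
    static int endOfPaddedString(byte[] packet, int start) {
        int i = start;
        while (packet[i] != 0) {
            i++;
        }
        return (i / 4 + 1) * 4;
    }

    static String decodeAddress(byte[] packet) {
        int end = 0;
        while (packet[end] != 0) {
            end++;
        }
        return new String(packet, 0, end, StandardCharsets.US_ASCII);
    }

    static float[] decodeFloats(byte[] packet) {
        int tagStart = endOfPaddedString(packet, 0);
        int dataStart = endOfPaddedString(packet, tagStart);
        int count = 0;
        for (int i = tagStart + 1; packet[i] != 0; i++) {
            if (packet[i] != 'f') {
                throw new IllegalArgumentException("only float arguments are supported");
            }
            count++;
        }
        ByteBuffer buffer = ByteBuffer.wrap(packet, dataStart, 4 * count);
        float[] values = new float[count];
        for (int i = 0; i < count; i++) {
            values[i] = buffer.getFloat();
        }
        return values;
    }

    public static void main(String[] args) {
        byte[] packet = encode("/landmarks", new float[] {0.5f, 0.25f, -1.0f});
        System.out.println(decodeAddress(packet) + " " + Arrays.toString(decodeFloats(packet)));
    }
}
```

In practice you never need to do this by hand, since both programs (and libraries such as oscP5) handle the encoding for you.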

Wekinator

Wekinator is a piece of software that takes OSC as input and produces OSC as output. The user demonstrates inputs together with their desired outputs and then trains a machine learning algorithm to predict the desired output for a given input. In our case, Wekinator will take the landmarks from the different hand gestures and output the predicted gesture.

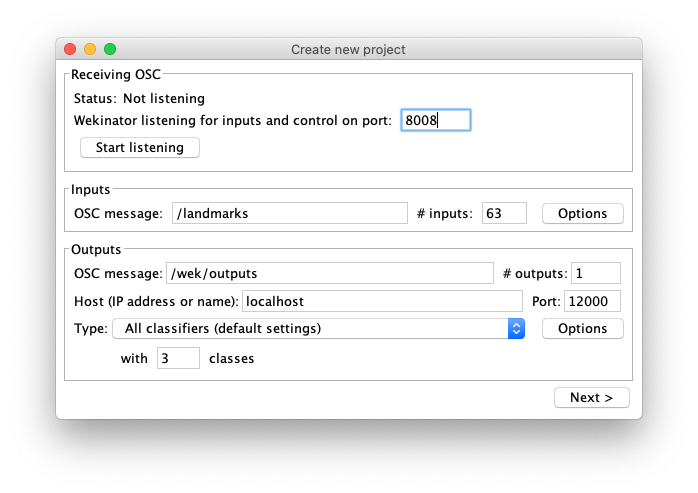

Configuring Wekinator

Open HandPose OSC and then open Wekinator. In Wekinator we need to set the following:

- input port set to 8008 (which is the default output port of HandPose OSC).

- input OSC message to /landmarks

- number of inputs to 63

- output number to 1

- output type to All classifiers (default settings)

- in the output type options, select Support Vector Machine.

- output with 3 classes (flat hand (1), clenched fist (2) and "peace sign" (3)).

Bonus info: in this example we treat the recognition as a classification task, which is why we select "All classifiers" as the output type. Wekinator can, however, also tackle use cases where the output is continuous, e.g. representing the level of clenching in a fist.
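If you're wondering where the 63 inputs come from: the hand model tracks 21 landmarks, each with an x, y and z coordinate (21 × 3 = 63). The sketch below regroups a flat array of 63 values into 21 triplets. The class `HandLandmarks` is a hypothetical helper, and the interleaved x, y, z ordering is an assumption for illustration, not something confirmed by HandPose OSC.

```java
// Illustrative helper: regroups the 63 input values into 21 (x, y, z) landmarks.
// ASSUMPTION: values arrive interleaved as x0, y0, z0, x1, y1, z1, ...
class HandLandmarks {
    static final int LANDMARK_COUNT = 21;
    static final int COORDS_PER_LANDMARK = 3;

    static float[][] toLandmarks(float[] flat) {
        int expected = LANDMARK_COUNT * COORDS_PER_LANDMARK; // 63
        if (flat.length != expected) {
            throw new IllegalArgumentException(
                    "expected " + expected + " values but got " + flat.length);
        }
        float[][] landmarks = new float[LANDMARK_COUNT][COORDS_PER_LANDMARK];
        for (int i = 0; i < flat.length; i++) {
            landmarks[i / COORDS_PER_LANDMARK][i % COORDS_PER_LANDMARK] = flat[i];
        }
        return landmarks;
    }

    public static void main(String[] args) {
        float[] flat = new float[63];
        for (int i = 0; i < flat.length; i++) {
            flat[i] = i;
        }
        float[][] landmarks = toLandmarks(flat);
        System.out.println("landmarks: " + landmarks.length
                + ", coordinates per landmark: " + landmarks[0].length);
    }
}
```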

A screenshot of the Wekinator settings can be seen below:

You can now press Next > and we're ready to train the algorithm!

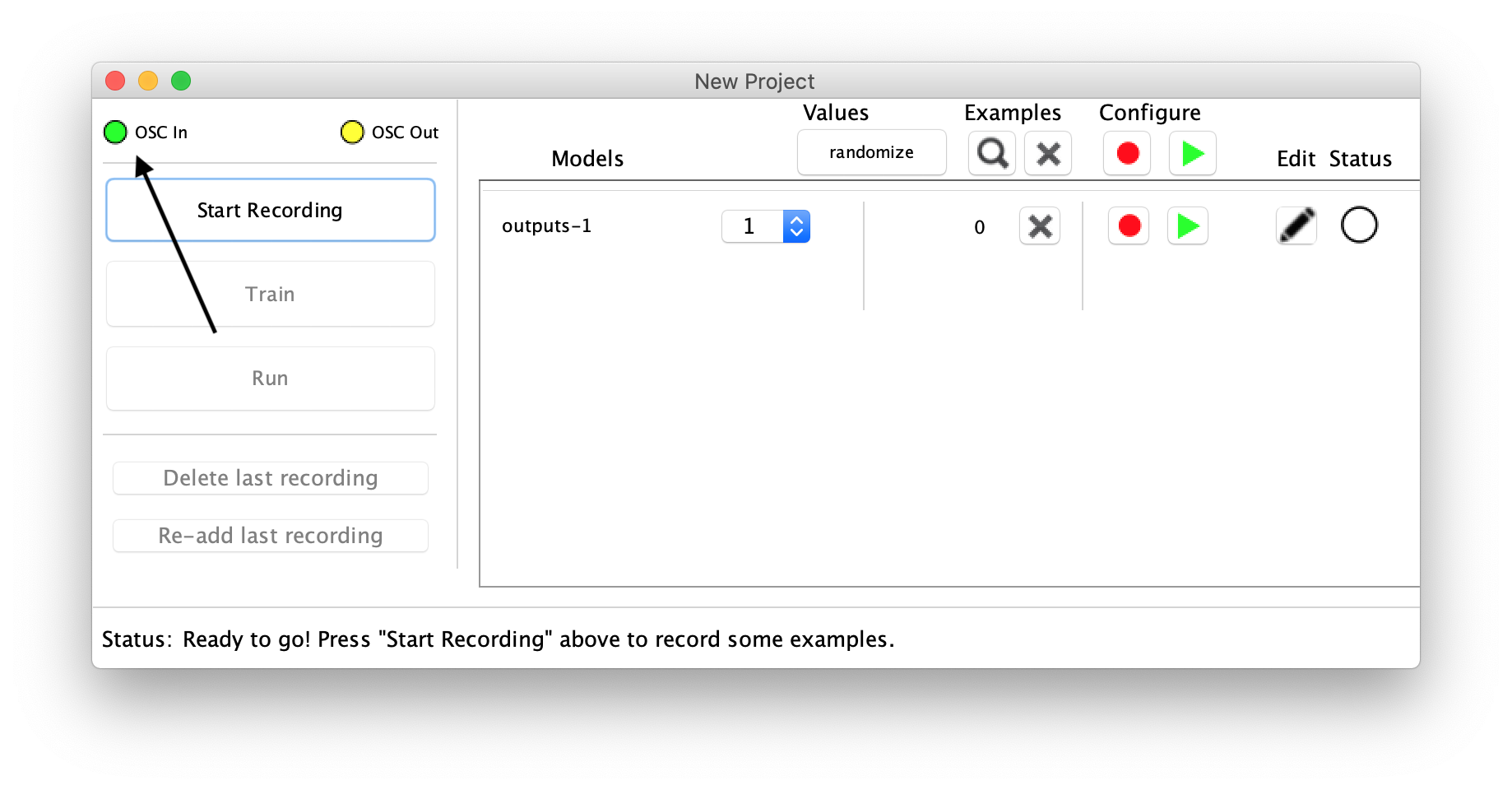

Training Wekinator

By now you should have HandPose OSC running and sending OSC to Wekinator. You can confirm that Wekinator is receiving OSC by checking that the circle in the upper left corner turns green when HandPose OSC is tracking your hand.

The procedure is now as follows:

- Select a desired class in the dropdown from handGesture-1. We represent flat hand as 1, clenched fist as 2 and "peace sign" as 3.

- Get ready to perform the selected gesture for HandPose OSC, which will track the landmarks.

- Press Start Recording. Wekinator will read the OSC output (landmarks) from HandPose OSC and observe these examples. Press Stop Recording after you have shown some variations of the given hand gesture.

- Repeat steps 1, 2 and 3 for each of the hand gestures.

- Press Train!
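To make the record, train and run cycle more concrete, here is a toy sketch of the same idea in code. Note that this is a 1-nearest-neighbour classifier, a deliberately simpler stand-in and not the Support Vector Machine that Wekinator trains. The class `ToyGestureClassifier` is hypothetical, and the two-value feature vectors are made up to keep the example short (the real input has 63 values).

```java
import java.util.ArrayList;
import java.util.List;

// Toy stand-in for the record/train/run cycle (NOT Wekinator's SVM):
// a 1-nearest-neighbour classifier that remembers every recorded example.
class ToyGestureClassifier {
    private final List<float[]> examples = new ArrayList<>();
    private final List<Integer> labels = new ArrayList<>();

    // "Recording": store one feature vector together with its class label.
    void record(float[] features, int label) {
        examples.add(features.clone());
        labels.add(label);
    }

    // "Running": return the label of the closest recorded example.
    int predict(float[] features) {
        if (examples.isEmpty()) {
            throw new IllegalStateException("record at least one example first");
        }
        int best = 0;
        double bestDistance = Double.POSITIVE_INFINITY;
        for (int i = 0; i < examples.size(); i++) {
            double distance = squaredDistance(examples.get(i), features);
            if (distance < bestDistance) {
                bestDistance = distance;
                best = i;
            }
        }
        return labels.get(best);
    }

    static double squaredDistance(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("feature vectors must have the same length");
        }
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return sum;
    }

    public static void main(String[] args) {
        ToyGestureClassifier classifier = new ToyGestureClassifier();
        classifier.record(new float[] {0f, 0f}, 1); // made-up "flat hand" example
        classifier.record(new float[] {1f, 1f}, 2); // made-up "clenched fist" example
        classifier.record(new float[] {5f, 5f}, 3); // made-up "peace sign" example
        System.out.println(classifier.predict(new float[] {0.9f, 1.2f}));
    }
}
```

The takeaway is the same as in Wekinator: the model can only be as good as the examples you record, so show it some variation of each gesture.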

Steps 2 and 3, where we perform and record a hand gesture.


Using the trained model

After training the model, we can use it to predict new gestures! Simply press Run. Now, when you perform hand gestures, the number shown in the drop-down menu should change, as can be seen in the animated GIF below:

A m a z i n g!

There's a high chance that Wekinator is not predicting the hand gestures as well as you'd hoped. You can increase the robustness of the machine learning model by adding more diversity to the training set, e.g. by training on both hands and by rotating and varying each hand gesture while recording.

Using the output

In a use case such as the one described in this tutorial, Wekinator is merely a link to some other software. Wekinator outputs OSC and can therefore be used with a variety of creative programming environments that take OSC as input, such as Processing, Max, openFrameworks, Unity, TouchDesigner, p5.js and Node.js.

One could e.g. make Pong controlled by hand inputs, a gesture-to-emoji converter, a hand drum machine, a keyboard replacement, a Tamagotchi encouraged by thumbs up or something else! Let's get to gesturing!

Here's a quick example in Processing, where the color changes depending on the received OSC message:

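    import oscP5.*;

    OscP5 oscP5;

    // Current target color; black until the first message arrives.
    color backgroundColor = color(0);
    // One color per gesture class: 1 = red, 2 = green, 3 = blue.
    color[] backgroundColors = {color(255, 0, 0), color(0, 255, 0), color(0, 0, 255)};

    void setup() {
      size(400, 400);
      // Listen on port 12000, the port Wekinator sends its output to by default.
      oscP5 = new OscP5(this, 12000);
      noStroke();
    }

    void draw() {
      // The low alpha makes the color fade in gradually instead of switching instantly.
      fill(backgroundColor, 10);
      rect(0, 0, width, height);
    }

    void oscEvent(OscMessage theOscMessage) {
      // Wekinator sends the predicted class as a float (1.0, 2.0 or 3.0).
      int oscArg = int(theOscMessage.get(0).floatValue());
      // Ignore values outside 1..3 so the array lookup cannot fail.
      if (oscArg >= 1 && oscArg <= backgroundColors.length) {
        backgroundColor = backgroundColors[oscArg-1];
      }
    }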
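If you'd rather work with gesture names than class numbers, a small lookup like the one below could sit between the OSC message and the rest of your program. This is only a sketch: `GestureLabels` and `labelFor` are hypothetical names, and the mapping simply follows the class numbers chosen in this tutorial (1 = flat hand, 2 = clenched fist, 3 = "peace sign").

```java
// Illustrative helper: maps the class number sent by the classifier
// (1, 2 or 3, delivered as a float) to a readable gesture name.
class GestureLabels {
    static final String[] LABELS = {"flat hand", "clenched fist", "peace sign"};

    static String labelFor(float classifierOutput) {
        int gestureClass = Math.round(classifierOutput);
        // Guard against unexpected values instead of indexing out of bounds.
        if (gestureClass < 1 || gestureClass > LABELS.length) {
            return "unknown";
        }
        return LABELS[gestureClass - 1];
    }

    public static void main(String[] args) {
        System.out.println(labelFor(2.0f));
    }
}
```

Returning "unknown" for unexpected values is a design choice made here so that a stray message can't crash the program.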

Hope you've found this tutorial useful! You can email Frederik at tollund@ruc.dk if you need help with any step :)

FAQ

If you have problems running HandPose OSC, this might be due to the security settings of your OS. Try referring to these articles for Windows, macOS or Linux.