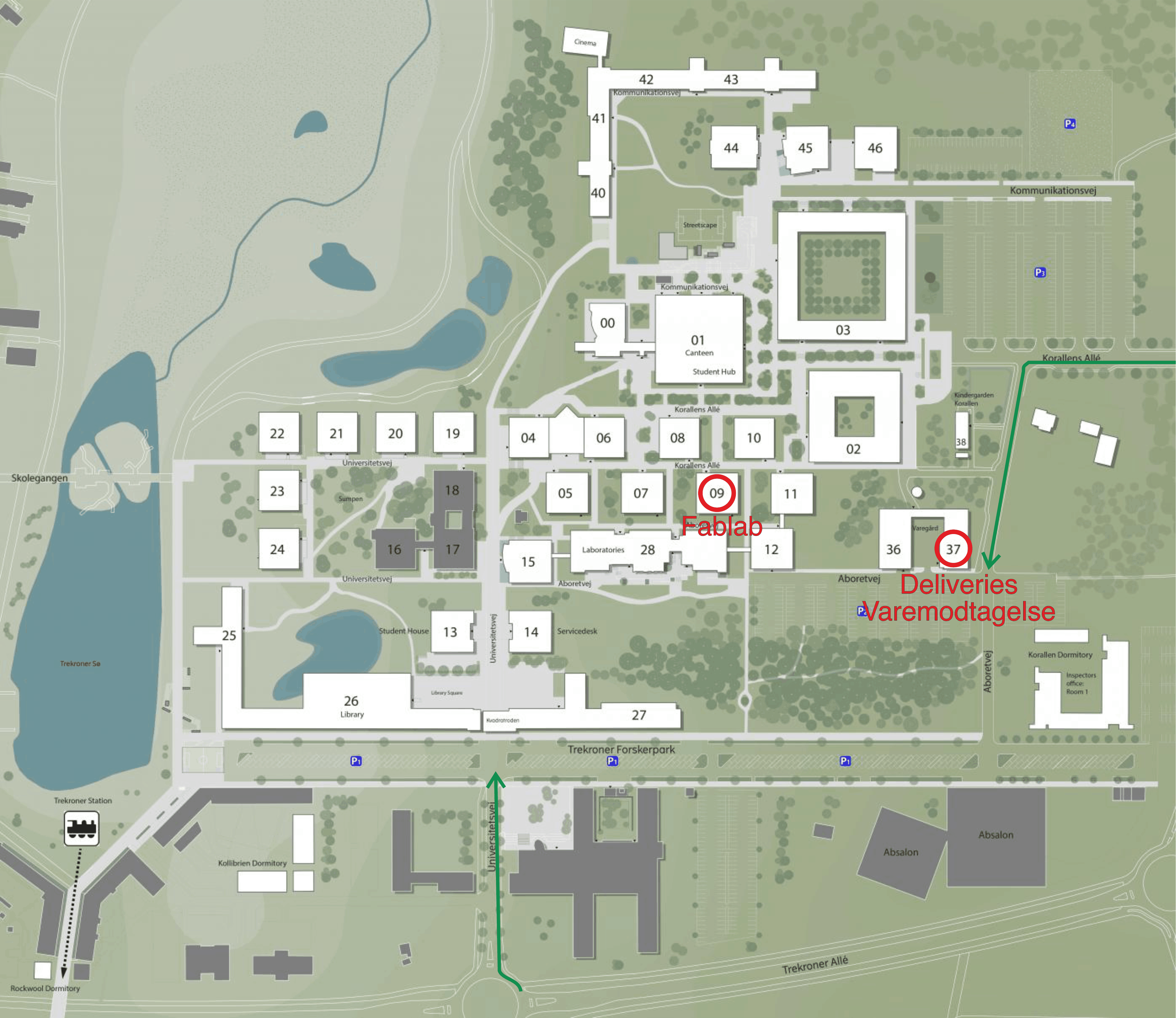

Face touch detection (almost) without code!

These days, not touching your face has become increasingly important, and places like MIT Media Lab are looking at ways of using technology to warn people when they touch their face.

If you are spending your time in isolation, you might have more time to train neural networks on your hands! In this short guide, we'll walk through training and running face touch detection in the browser using a webcam and Google's Teachable Machine. Wash your hands thoroughly and let's start!

Creating the dataset

Go to https://teachablemachine.withgoogle.com/, press Get Started, and then select Image Project.

NOTE: The pose model doesn't work well when only the upper body is visible. If you're using a camera that is further from your body rather than a laptop webcam, you might have success starting a pose project instead.

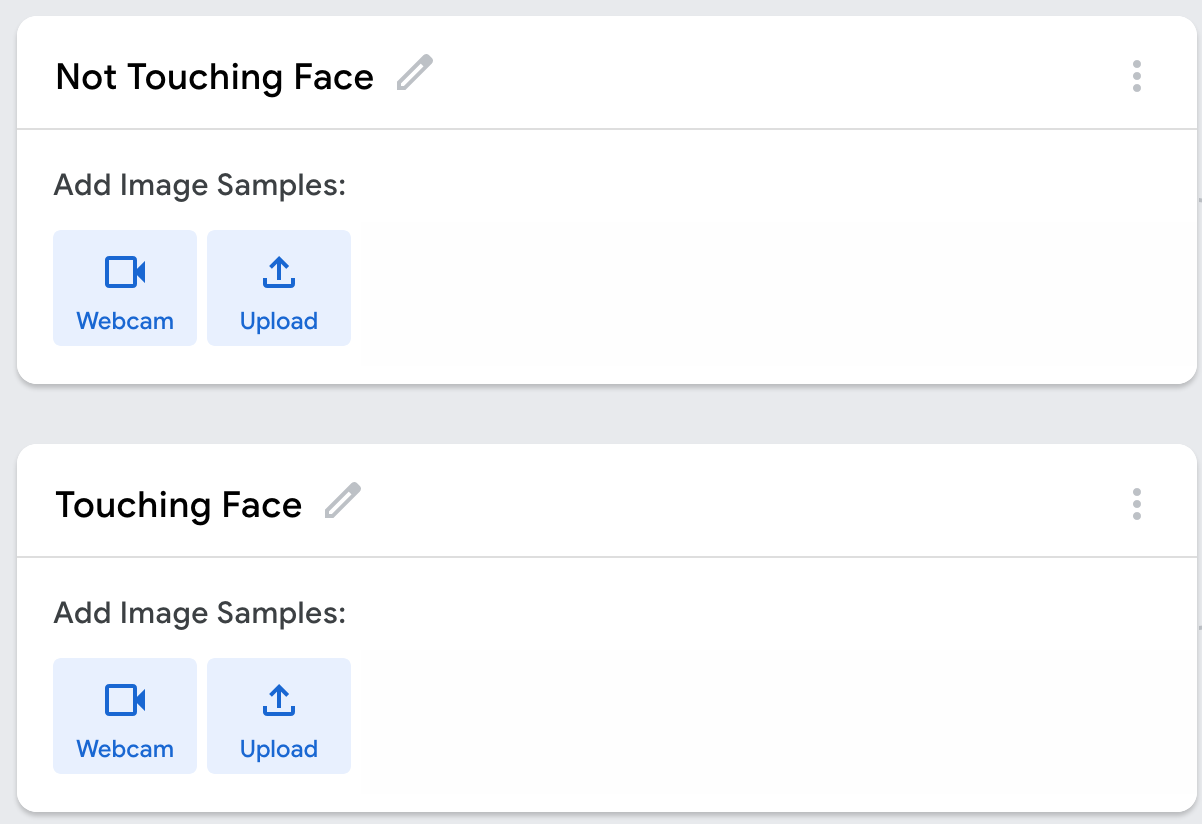

You will now see two classes, which we will name Touching Face and Not Touching Face.

We now have our two categories and can start adding images. The easiest way to do this is using the webcam.

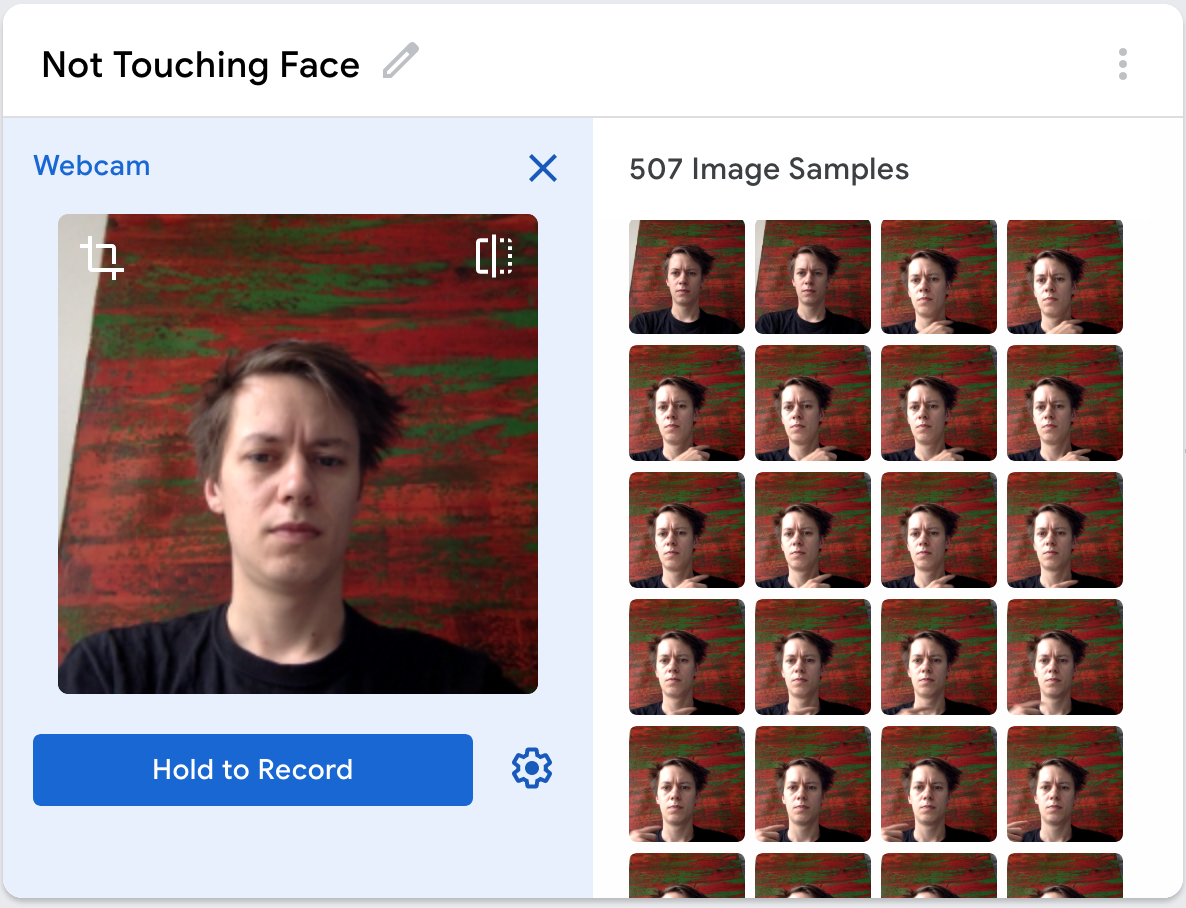

To make the model more versatile and accurate, it helps to record images under varied lighting and contrast, from different angles and distances to the camera, while wearing different hats, and while moving around a bit. For the Touching Face category, also touch your face in many different ways.

Keep this in mind, press Hold to Record, and take a lot of pictures of your face without touching it:

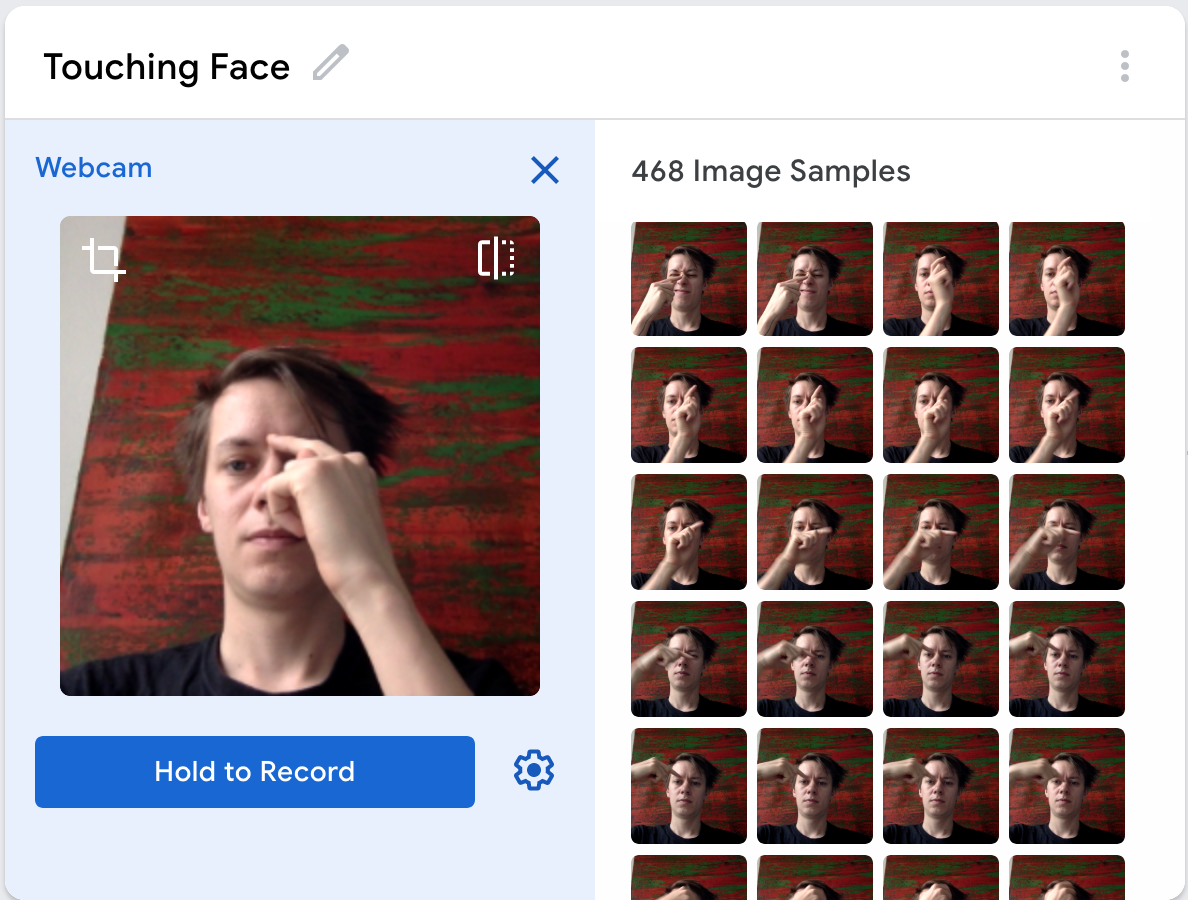

Similarly, record data for the Touching Face class, this time with images of you touching or almost touching your face.

And that's it!

Training the model

This step is easy. Press Train Model to start training the neural network. The training runs on your own computer, so you might hear the fans spinning quite a bit. Note that the browser tab has to stay active for the training to continue.

After training the model, you should be able to preview it with your webcam!

Using the model

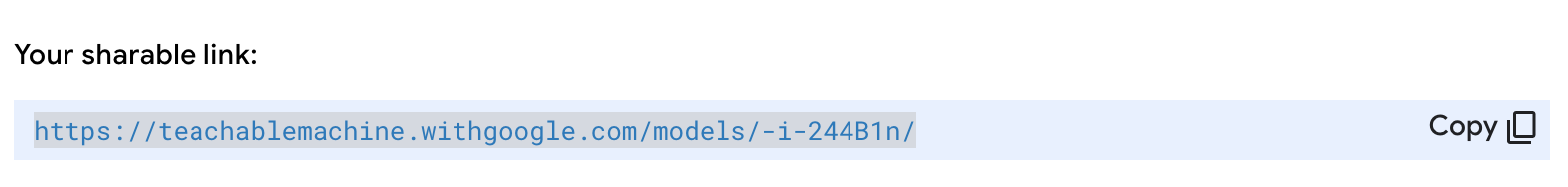

You can now export the trained model by pressing Export Model in the preview tab. It gets a bit more complex here, but for our purposes we want to choose Tensorflow.js and upload the model to get a shareable link. Make sure these settings are selected and press Upload my Model. After it's done uploading, you can copy its URL.
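If you later want to load the uploaded model from your own code, the shareable link acts as a base URL: the snippet Teachable Machine shows in the export dialog appends `model.json` and `metadata.json` to it. Here is a minimal sketch of that step. The helper name `modelFileUrls` is made up for this guide, and the model ID in the usage note is a placeholder, not a real model.

```javascript
// Hypothetical helper (not part of any library): build the two file URLs
// that are typically loaded from a Teachable Machine shareable link.
function modelFileUrls(shareUrl) {
  // Normalize the link so it ends with exactly one slash.
  const base = shareUrl.trim().replace(/\/+$/, "") + "/";
  return {
    modelURL: base + "model.json",       // network topology and weight manifest
    metadataURL: base + "metadata.json", // class labels and other metadata
  };
}
```

For example, `modelFileUrls("https://teachablemachine.withgoogle.com/models/abc123/")` gives you a `modelURL` ending in `abc123/model.json` and a `metadataURL` ending in `abc123/metadata.json`.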

You could now do all kinds of things with this model using TensorFlow.js. We've made a quick sketch using p5.js where you can test your model in your browser.

Open this link and you'll see a bunch of JavaScript on the left side. Replace the URL in line 2 with the URL you just copied and press the Play button in the upper left corner.

Voilà! You now have a dead simple browser tab running that will warn you with a sound if you touch your face!
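If you're curious how the decision to warn could be made in code, here is a rough sketch. It is not necessarily how the linked sketch works. It assumes the predictions arrive as an array of `{ className, probability }` objects (the shape returned by the Teachable Machine image library's `predict`), and that the class is named Touching Face as in this guide. The names `touchProbability` and `createTouchDetector`, the 0.8 threshold, and the 5-frame streak are all illustrative choices.

```javascript
// Assumed class label, matching the name chosen earlier in this guide.
const TOUCH_LABEL = "Touching Face";

// Return the probability given to the touching class (0 if the label is missing).
function touchProbability(predictions, label = TOUCH_LABEL) {
  const match = predictions.find((p) => p.className === label);
  return match ? match.probability : 0;
}

// Create a detector that only reports a touch after `framesNeeded`
// consecutive frames at or above `threshold`. This keeps a single
// misclassified frame from setting off the warning sound.
function createTouchDetector({ threshold = 0.8, framesNeeded = 5 } = {}) {
  let streak = 0; // number of consecutive "touching" frames seen so far
  return function update(predictions) {
    streak = touchProbability(predictions) >= threshold ? streak + 1 : 0;
    return streak >= framesNeeded;
  };
}
```

You would call the function returned by `createTouchDetector()` once per webcam frame with the latest predictions, and play the sound whenever it returns `true`. Lower the threshold or the frame count if the warning feels too slow.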

Taking things further:

By training the model on different images, you could do object recognition! If, for example, you find it difficult to tell soybeans from chickpeas or yams from sweet potatoes, this is your moment.

By modifying the provided code, you could make a fun party game where your score increases the longer you abstain from touching your face. Great!
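As a starting point for such a game, here is one possible way to keep score. It assumes you already have a true/false "is touching" signal for each frame and a timestamp in milliseconds (for instance from p5.js's `millis()`). The name `createScoreKeeper` and the one-point-per-second rule are invented for this example.

```javascript
// Hypothetical score keeper: one point per full second without a face touch.
function createScoreKeeper() {
  let streakStart = null; // timestamp (ms) when the current touch-free streak began
  let best = 0;           // highest score reached so far

  return function update(isTouching, nowMs) {
    if (isTouching) {
      // A touch ends the streak; the best score is kept.
      streakStart = null;
      return { current: 0, best };
    }
    if (streakStart === null) {
      streakStart = nowMs; // first touch-free frame starts a new streak
    }
    const current = Math.floor((nowMs - streakStart) / 1000);
    best = Math.max(best, current);
    return { current, best };
  };
}
```

Call the returned function once per frame and draw `current` and `best` on screen. Because the score is derived from timestamps rather than frame counts, it does not depend on how fast the sketch runs.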

Stay safe, stay at home, and send an email to Frederik at tollund@ruc.dk if you have questions about this tutorial.